On http://www.brussel.be/webcam-grote-markt you can find a webcam stream of the Grand Place in Brussels. When I saw it, I wondered whether I could build a model to detect individual people and eventually track their behaviour as they walk around one of the most touristy spots in Brussels.

I set up a website with TensorFlow.js using the pre-trained coco-ssd model, but I was slightly disappointed with the results. After doing some more research I read about transfer learning and decided to use it to try to improve the model.
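For context, coco-ssd's `detect()` method resolves to an array of predictions, each with a `bbox` (`[x, y, width, height]` in pixels), a `class` label and a `score`. Since only people matter here, the first step is to throw away everything else. The snippet below is a minimal sketch of that filtering step, not the code behind the site: the `Prediction` type, the `filterPeople` and `centre` helpers and the 0.5 score threshold are all my own assumptions, and the sample predictions are hand-written rather than real model output.

```typescript
// Local mirror of the shape of a coco-ssd prediction, so this sketch
// runs without the library installed (hypothetical type name).
interface Prediction {
  bbox: [number, number, number, number]; // [x, y, width, height] in pixels
  class: string; // COCO label, e.g. "person"
  score: number; // confidence between 0 and 1
}

// Hypothetical helper: keep only "person" detections at or above minScore.
// The 0.5 default is an assumption; tune it for the webcam feed.
function filterPeople(predictions: Prediction[], minScore = 0.5): Prediction[] {
  return predictions.filter((p) => p.class === "person" && p.score >= minScore);
}

// Hypothetical helper: centre point of a box, a simple anchor
// for following a person from one frame to the next.
function centre(p: Prediction): [number, number] {
  const [x, y, w, h] = p.bbox;
  return [x + w / 2, y + h / 2];
}

// Hand-written sample predictions (not real model output).
const sample: Prediction[] = [
  { bbox: [10, 20, 30, 60], class: "person", score: 0.82 },
  { bbox: [100, 40, 25, 50], class: "person", score: 0.31 },
  { bbox: [200, 80, 60, 40], class: "car", score: 0.9 },
];

const people = filterPeople(sample);
console.log(people.length); // 1 (the low-score person and the car are dropped)
console.log(centre(people[0])); // centre is [25, 50]
```

In the browser you would pass real predictions instead, for example `filterPeople(await model.detect(videoElement))` after `const model = await cocoSsd.load()`, with `cocoSsd` imported from `@tensorflow-models/coco-ssd`.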

I recorded several short clips of the webcam stream using ffmpeg.

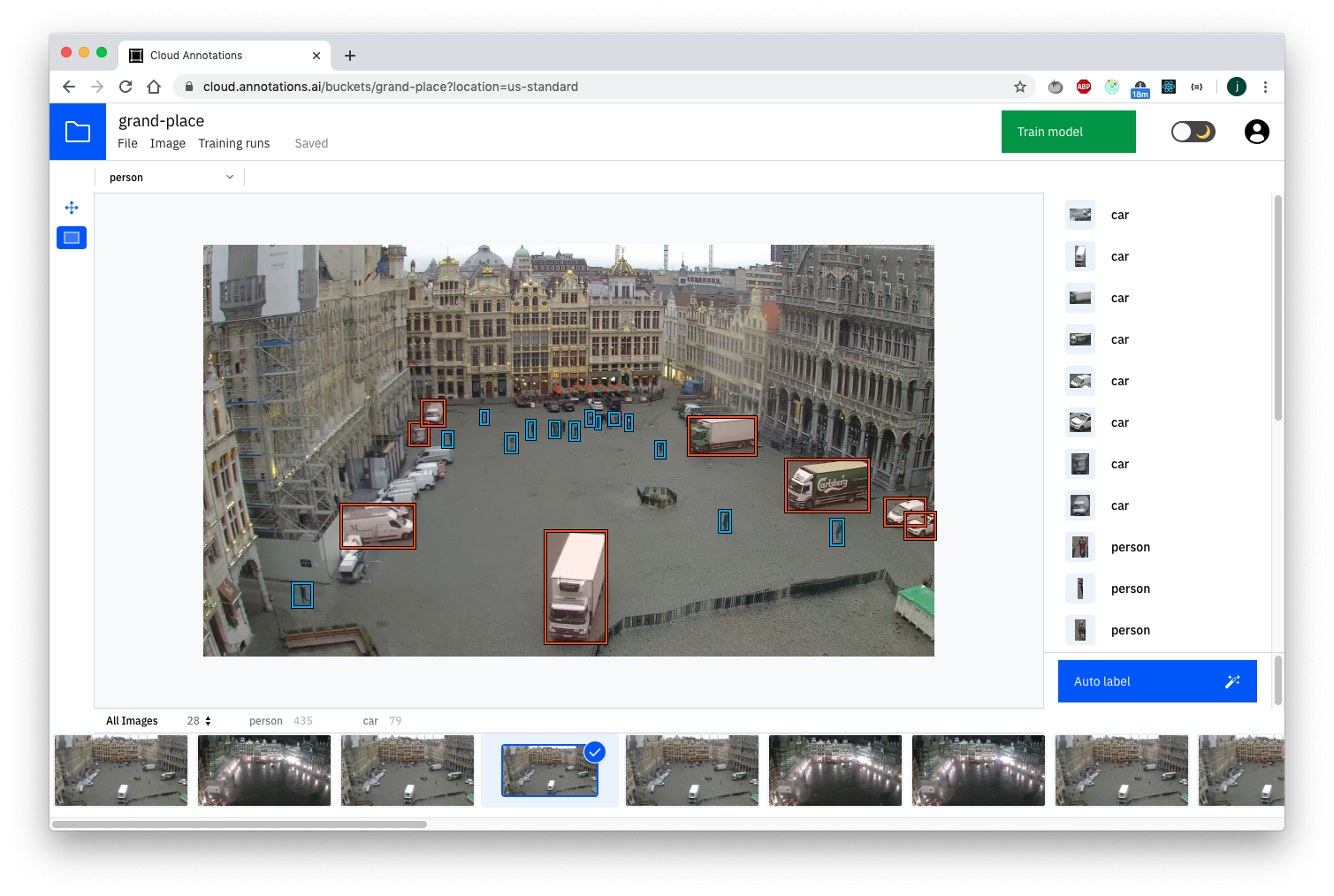

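```shell
# Record the HLS webcam stream to an MP4 without re-encoding (press q to stop)
ffmpeg -i http://stream.brucity.be/BXLCAM/CAM-GrandePlace.stream/chunklist_w1781624015.m3u8 -c copy -bsf:a aac_adtstoasc output.mp4

# Split the recording into numbered JPEG frames (the output folder must exist)
mkdir -p frames
ffmpeg -i "output.mp4" "frames/out-%03d.jpg"
```

Then I used IBM's cloud annotation tool to annotate the frames.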

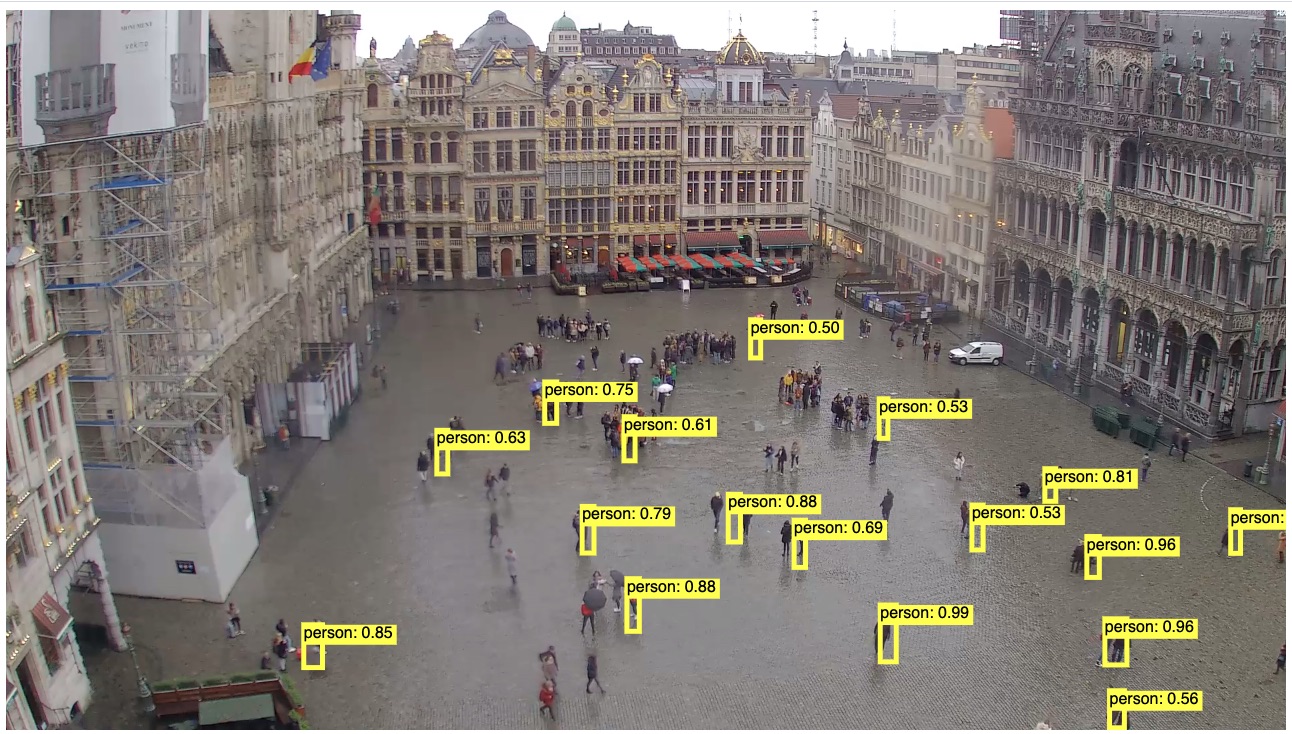

I was surprised by how much the model improved once I provided some more specific training data. This is the result:

The live result can be found here.